In recent years, neural-network-based approaches (i.e. deep learning) have underpinned state-of-the-art systems across natural language processing, whether in machine translation, natural language inference, language modeling or sentiment analysis. At the same time, researchers have asked what kind of linguistic information these neural networks are able to capture. Answering this question is not trivial: state-of-the-art models are usually many layers deep, with non-linear transformations learned through billions of mathematical operations. The benchmarks and downstream tasks used to assess these models offer little help either, since they “require complex forms of inference, making it difficult to pinpoint the information a model is relying upon” (Conneau et al., 2018).

Probing tasks (also referred to as diagnostic classifiers, auxiliary classifiers or decoding) take the encoded representations produced by one system and use them as input features to train a separate classifier on another task of interest: the probing task. The probing task is designed to isolate a particular linguistic phenomenon, and if the probing classifier performs well on it, we infer that the system has encoded that phenomenon in its representations.
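To make the setup concrete, here is a minimal sketch of a probe in Python with NumPy. It assumes the encoder's representations have already been extracted and frozen; the data is synthetic (random 16-dimensional vectors standing in for sentence embeddings, with a hypothetical binary property placed in one dimension), and `train_probe`, `accuracy` and `majority_baseline` are illustrative names, not part of any library.

```python
import numpy as np

def train_probe(X, y, lr=0.5, epochs=500):
    """Fit a binary logistic-regression probe on frozen features X (n, d), labels y in {0, 1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(y = 1 | x)
        err = p - y                               # d(cross-entropy)/d(logit) per example
        w -= lr * (X.T @ err) / n                 # full-batch gradient step on the probe only
        b -= lr * err.mean()
    return w, b

def accuracy(w, b, X, y):
    """Fraction of examples where the probe's thresholded prediction matches the label."""
    return float(((X @ w + b > 0).astype(int) == y).mean())

def majority_baseline(y_train, y_test):
    """Accuracy of always predicting the most frequent training label."""
    majority = int(y_train.mean() >= 0.5)
    return float((y_test == majority).mean())

# Synthetic stand-in for encoder output (hypothetical): 400 "sentence embeddings"
# of size 16, with a binary property linearly encoded in dimension 3.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 16))
y = (X[:, 3] > 0).astype(int)

X_train, X_test, y_train, y_test = X[:300], X[300:], y[:300], y[300:]
w, b = train_probe(X_train, y_train)
probe_acc = accuracy(w, b, X_test, y_test)
base_acc = majority_baseline(y_train, y_test)
print(f"probe accuracy: {probe_acc:.2f}, majority baseline: {base_acc:.2f}")
```

Only the probe's weights are updated; the representations `X` are fixed inputs. A simple linear probe is a common choice so that good accuracy is more plausibly attributable to the representations than to the probe itself, and comparing against a majority-class baseline checks that the probe learned more than the label distribution.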

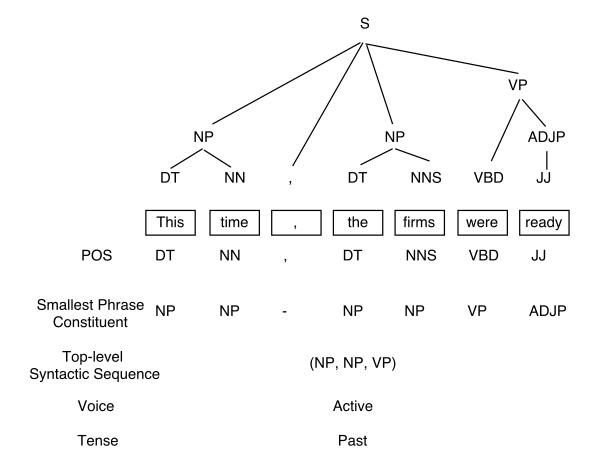

An early use of probing tasks can be found in Shi et al. (2016), *Does String-Based Neural MT Learn Source Syntax?* The authors trained two NMT models (English-to-French and English-to-German) and then used a separate corpus of 10K sentences annotated with five syntactic labels (see image below).

Using the NMT encoders, they converted these sentences into encoded representations and used them to train a logistic regression model for each syntactic label. From the results of these probing classifiers, the authors conclude that “both local and global syntactic information about source sentences is captured by the encoder” and that “Different types of syntax is stored in different layers, with different concentration degrees.” This illustrates how probing tasks can explain both what kind of linguistic information neural architectures capture and how they capture it.
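The per-label, per-layer analysis described above can be sketched in Python with NumPy as one binary logistic-regression probe for each (layer, label) pair, each scored on held-out data. This is an illustrative sketch, not Shi et al.'s code: the layer names, the two binary labels and the synthetic data (each label linearly encoded in only one layer) are hypothetical.

```python
import numpy as np

def train_probe(X, y, lr=0.5, epochs=500):
    """Binary logistic-regression probe trained by full-batch gradient descent."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(y = 1 | x)
        err = p - y
        w -= lr * (X.T @ err) / n
        b -= lr * err.mean()
    return w, b

def probe_layers(layers, labels, n_train):
    """Train one probe per (layer, label) pair and return held-out accuracy for each."""
    results = {}
    for layer_name, X in layers.items():
        for label_name, y in labels.items():
            w, b = train_probe(X[:n_train], y[:n_train])
            preds = (X[n_train:] @ w + b > 0).astype(int)
            results[(layer_name, label_name)] = float((preds == y[n_train:]).mean())
    return results

# Synthetic example (hypothetical): label_a is encoded only in layer_1,
# label_b only in layer_2; all other dimensions are noise.
rng = np.random.default_rng(1)
n, d = 400, 16
z_a, z_b = rng.normal(size=n), rng.normal(size=n)
labels = {"label_a": (z_a > 0).astype(int), "label_b": (z_b > 0).astype(int)}
layer_1 = rng.normal(size=(n, d))
layer_1[:, 0] = z_a
layer_2 = rng.normal(size=(n, d))
layer_2[:, 0] = z_b

results = probe_layers({"layer_1": layer_1, "layer_2": layer_2}, labels, n_train=300)
for (layer, label), acc in sorted(results.items()):
    print(f"{layer} / {label}: {acc:.2f}")
```

In this toy setup each label is decodable from only one layer, so the accuracy table shows a simplified version of the layer-wise differences a probing study looks for.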

More recently, more general probing frameworks have been proposed to assess a variety of linguistic phenomena across different neural networks. Conneau et al. (2018) introduced 10 probing tasks as part of SentEval to evaluate the linguistic capabilities of sentence embeddings, Şahin et al. (2019) introduced 15 probing tasks for word representations in 24 languages, and Kim et al. (2019) introduced 9 probing tasks for function words.
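To give a flavour of how such probing datasets can be built, the sketch below generates labels for two tasks modeled loosely on SentEval's *Length* task (predicting a sentence's length, grouped into bins) and *BigramShift* task (detecting whether two adjacent words have been swapped). The bin computation, the 50% swap rate and the function names `length_labels` and `bigram_shift` are assumptions for illustration, not the original SentEval data-generation code.

```python
import random

def length_labels(sentences, n_bins=6):
    """Map each sentence's word count to one of n_bins roughly equal-width bins."""
    lengths = [len(s.split()) for s in sentences]
    lo, hi = min(lengths), max(lengths)
    span = hi - lo + 1  # number of distinct lengths in the observed range
    # Integer arithmetic avoids floating-point edge cases at bin boundaries.
    return [(n - lo) * n_bins // span for n in lengths]

def bigram_shift(sentence, rng):
    """Return (sentence, label): label 1 if two random adjacent words were swapped, else 0.

    Note: swapping two identical adjacent words leaves the text unchanged but is still labeled 1.
    """
    words = sentence.split()
    if len(words) < 2 or rng.random() < 0.5:  # leave roughly half of the sentences intact
        return sentence, 0
    i = rng.randrange(len(words) - 1)
    words[i], words[i + 1] = words[i + 1], words[i]
    return " ".join(words), 1

sentences = [
    "the cat sat",
    "a dog barked loudly at night",
    "she reads",
    "we went to the market yesterday morning with friends",
]
print(length_labels(sentences))  # [0, 3, 0, 5]

rng = random.Random(0)
for s in sentences:
    print(bigram_shift(s, rng))
```

Because each label is produced by a controlled transformation of the text, a probe trained on these labels targets one property at a time, which is the kind of isolation probing tasks are designed for.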

What role probing tasks and new probing frameworks will play in evaluating NLP systems remains to be seen. However, the kind of insight they offer into these systems seems promising.

References

Conneau, A., Kruszewski, G., Lample, G., Barrault, L., & Baroni, M. (2018). What you can cram into a single vector: Probing sentence embeddings for linguistic properties. ACL.

Shi, X., Padhi, I., & Knight, K. (2016, November). Does string-based neural MT learn source syntax? In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (pp. 1526-1534).

Şahin, G. G., Vania, C., Kuznetsov, I., & Gurevych, I. (2019). LINSPECTOR: Multilingual Probing Tasks for Word Representations. ACL.

Kim, N., Patel, R., Poliak, A., Wang, A., Xia, P., McCoy, R. T., ... & Bowman, S. R. (2019). Probing What Different NLP Tasks Teach Machines about Function Word Comprehension. ACL.